Model Context Protocol (MCP)

Plugging into the next big thing

Last month, we talked about how tool calling will be one of the most important capabilities LLMs need to improve on, and how most premium model providers are racing to improve it to meet the needs of agents. For agents to complete the multiple tasks that add up to an objective, they must be good at executing each of those tasks and must know which tools they have available. Hence the term “tool calling”.

This time, I’d like to cover Anthropic’s Model Context Protocol, now better known as MCP.

Right now, there’s a rush of companies creating their own MCP servers, and a slew of applications trying their best to make good use of them. First, though: why is MCP so important? Why is everyone talking about it while I’m sitting here vibe coding?

Think about what you’d need to communicate with any given application. Today, most applications offer API access, a way for code to communicate with them. However, an API doesn’t work on every surface. For example, if I’m chatting with a chatbot and want to use those APIs to make the conversation more useful, I’d have to write an app. Or if I’m writing code in Windsurf and want to vibe code by describing what I want to build, I have to hope there’s enough API documentation for the model to figure out what to write, and that I’ve set up all the credentials correctly.

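Enter MCP. Running an MCP server is super easy: you run an npx command and supply the appropriate credentials. For example, this is how you could start Stripe’s MCP server from your terminal (this particular command also launches the MCP Inspector, a debugging UI, against a locally built copy of the server):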

```shell
# Start MCP Inspector and server with all tools
npx @modelcontextprotocol/inspector node dist/index.js --tools=all --api-key=YOUR_STRIPE_SECRET_KEY
```

But not everyone has access to a terminal, so there are now config files that describe the same setup. For example, here is a Slack connection:

```json
{
  "mcpServers": {
    "slack": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-slack"
      ],
      "env": {
        "SLACK_BOT_TOKEN": "xoxb-your-bot-token",
        "SLACK_TEAM_ID": "T01234567"
      }
    }
  }
}
```
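To see why the config is just the terminal command in another form, here’s a small Python sketch that reads the Slack entry and produces the command line and environment variables a client would use to launch the server. The helper name `launch_spec` is hypothetical, and real clients (Claude Desktop, Windsurf, and so on) each have their own launch logic; this only illustrates the mapping.

```python
import json

# The Slack entry from the config above, wrapped in an outer object so it is valid JSON.
CONFIG = """
{
  "mcpServers": {
    "slack": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-slack"],
      "env": {
        "SLACK_BOT_TOKEN": "xoxb-your-bot-token",
        "SLACK_TEAM_ID": "T01234567"
      }
    }
  }
}
"""


def launch_spec(config_text: str, name: str) -> tuple[list[str], dict[str, str]]:
    """Hypothetical helper: return the argv and extra environment variables
    needed to start the named server. A client could then run something like
    subprocess.Popen(argv, env={**os.environ, **env})."""
    entry = json.loads(config_text)["mcpServers"][name]
    argv = [entry["command"], *entry.get("args", [])]
    env = dict(entry.get("env", {}))
    return argv, env


argv, env = launch_spec(CONFIG, "slack")
print(" ".join(argv))  # npx -y @modelcontextprotocol/server-slack
print(sorted(env))     # ['SLACK_BOT_TOKEN', 'SLACK_TEAM_ID']
```

The credentials travel as environment variables of the server process, which is why the same setup can be written either as a shell command or as a config entry.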

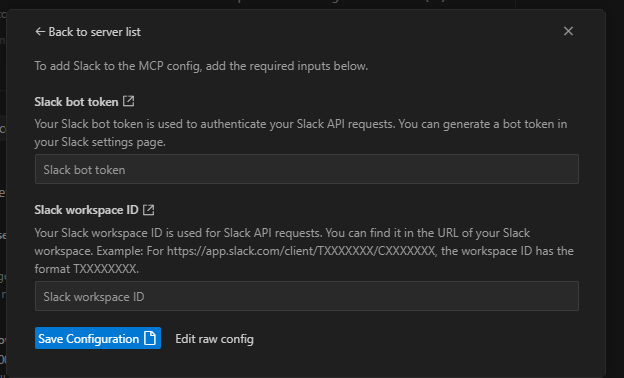

However, the config file still looks kind of complicated, even though it’s just the same kind of npx command as above. So now there are even simpler ways to add MCP servers; for example, this is how Windsurf lets users add them:

Notice that we’re simply launching the server by choosing the platform and adding the keys. In setups like these, the MCP server runs locally: when you run these commands or add these MCP servers, the server process runs on your own machine. It still calls out to services like Stripe or Slack through their APIs, but the MCP layer itself lives on your computer rather than on someone else’s.
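Because the server runs locally, the client usually launches it as a child process and talks to it over stdin and stdout, using JSON-RPC 2.0 messages written one per line (MCP’s “stdio” transport). The sketch below only encodes and decodes such messages in memory, with a byte buffer standing in for the process pipe. The client name and version are made-up placeholders, and the protocol version string is the one from the original November 2024 spec.

```python
import io
import json
from typing import Iterable


def encode(message: dict) -> bytes:
    """One JSON-RPC message per line; json.dumps escapes any newlines inside strings."""
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode(lines: Iterable[bytes]) -> list[dict]:
    """Parse each non-empty line back into a message."""
    return [json.loads(line) for line in lines if line.strip()]


initialize = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2024-11-05",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},  # placeholder values
    },
}
initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}  # a notification: no id

# A real client would write these bytes to proc.stdin of a subprocess.Popen handle;
# here a BytesIO buffer stands in for that pipe.
pipe = io.BytesIO(encode(initialize) + encode(initialized))
for message in decode(pipe):
    print(message["method"])
```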

So now you’ve hooked into an MCP server, what’s actually inside?

Well, first, it’s built on Anthropic’s open-source SDK. The SDK provides libraries such as mcp/server, which spins up the server instance and lets you define tools, resources, and prompts. It also supports a few deployment methods, including direct execution and integration with Claude Desktop.
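The split between tools, resources, and prompts is easier to see in code. The following is a deliberately tiny, dependency-free Python stand-in, not the SDK’s actual API: `ToyServer` and its decorators are hypothetical, and the real SDK adds the protocol handling, schemas, and transports on top. Roughly, tools are actions the model can invoke, resources are data identified by a URI that the client can load into context, and prompts are reusable templates a user can pick.

```python
from typing import Callable


class ToyServer:
    """Hypothetical, dependency-free stand-in for an MCP server object.
    It only records what the server exposes; it does no networking."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: dict[str, Callable] = {}
        self.resources: dict[str, Callable] = {}
        self.prompts: dict[str, Callable] = {}

    def tool(self, fn: Callable) -> Callable:
        # Tools: actions the model can invoke with arguments.
        self.tools[fn.__name__] = fn
        return fn

    def resource(self, uri: str) -> Callable[[Callable], Callable]:
        # Resources: read-only data addressed by a URI.
        def register(fn: Callable) -> Callable:
            self.resources[uri] = fn
            return fn
        return register

    def prompt(self, fn: Callable) -> Callable:
        # Prompts: reusable message templates.
        self.prompts[fn.__name__] = fn
        return fn


server = ToyServer("invoice-demo")


@server.tool
def create_invoice(customer_id: str, amount_cents: int) -> str:
    return f"draft invoice for {customer_id}: {amount_cents} cents"  # no real API call


@server.resource("docs://invoices")
def invoice_docs() -> str:
    return "Invoices need a customer_id and an amount in cents."


@server.prompt
def summarize_invoices(period: str) -> str:
    return f"Summarize all invoices created during {period}."


print(sorted(server.tools), sorted(server.resources), sorted(server.prompts))
```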

Many things about MCP sound like APIs, but MCP is optimized for LLM interactions. How does an API translate into MCP? You guessed it: tools. For example, the Slack MCP server has tools like list channels, post message, and reply to thread, whereas the Stripe MCP server has tools like create customer, create invoice, and read documentation. Again, it sounds like an API spec, but let’s keep going a bit…
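Here’s roughly what that “API-like” tool list looks like on the wire. In MCP, a client asks the server for `tools/list`, and each tool comes back with a `name`, a natural-language `description`, and an `inputSchema` written as JSON Schema. The descriptions are aimed at the model, which is part of what makes this different from a plain API spec. The Slack-style tool names and fields below are illustrative, not copied from the real Slack server, and `describe` is a hypothetical helper that just prints a readable summary.

```python
# A tools/list result shaped like the MCP spec. Names and fields are illustrative.
tools_list_result = {
    "tools": [
        {
            "name": "list_channels",
            "description": "List public channels in the workspace.",
            "inputSchema": {
                "type": "object",
                "properties": {"limit": {"type": "integer", "description": "Max channels to return"}},
            },
        },
        {
            "name": "post_message",
            "description": "Post a new message to a channel.",
            "inputSchema": {
                "type": "object",
                "properties": {"channel_id": {"type": "string"}, "text": {"type": "string"}},
                "required": ["channel_id", "text"],
            },
        },
    ]
}


def describe(tool: dict) -> str:
    """Hypothetical helper: one-line summary; optional parameters get a '?'."""
    schema = tool["inputSchema"]
    required = set(schema.get("required", []))
    params = ", ".join(
        name + ("" if name in required else "?") for name in schema.get("properties", {})
    )
    return f"{tool['name']}({params}): {tool['description']}"


for tool in tools_list_result["tools"]:
    print(describe(tool))
```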

Notice that “read documentation” from the Stripe list isn’t actually an API call: it’s an LLM-specific tool that helps the model serve the user better by reading the documentation to figure things out. If the LLM gets confused, it simply calls the read documentation tool to understand. Here’s an example from trying to code with the MCP server in Windsurf:

Notice in this example that it figured out the tool and which inputs the tool needed. While I could have relied on the LLM having this information in its training data, it’s better to have an agent-specific action that is always up to date. Now I can tell Windsurf to build an app that collects those inputs, or a web app to gather them. If I were interacting with a chatbot, I could even tell it to create the invoice directly from the inputs.

In fact, so far MCP seems to be most powerful with a chatbot. You are literally giving the chat access to an entire system, along with an understanding of how best to use it. For that use case it’s even better than an API, since it plugs in directly and executes things right away. An API requires some code to be written, hosted, and executed, which is great if you’re using a tool like Windsurf, but not as useful if you’re using something like ChatGPT.
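The flow described above (read the docs, work out the inputs, then call the tool) can be sketched in Python. Everything here is hypothetical for illustration: the documentation text, the `create_invoice` schema, and the helper names. Only the overall shapes follow MCP: tool results come back as a list of content blocks, and the client invokes a tool with a JSON-RPC `tools/call` request carrying the tool `name` and its `arguments`.

```python
import json

# Illustrative documentation text; a real server might fetch the provider's docs.
DOCS = {
    "create_invoice": "create_invoice requires 'customer'; 'days_until_due' is optional.",
}

# Hypothetical input schema for a create_invoice tool.
CREATE_INVOICE_SCHEMA = {
    "type": "object",
    "properties": {"customer": {"type": "string"}, "days_until_due": {"type": "integer"}},
    "required": ["customer"],
}


def read_documentation(topic: str) -> dict:
    """A tool that calls no external API: it returns text for the model to read,
    wrapped in the content-block shape MCP uses for tools/call results."""
    text = DOCS.get(topic, f"No documentation found for {topic!r}.")
    return {"content": [{"type": "text", "text": text}], "isError": False}


def missing_arguments(schema: dict, arguments: dict) -> list[str]:
    """Required fields the model has not filled in yet."""
    return [name for name in schema.get("required", []) if name not in arguments]


def tools_call_request(request_id: int, name: str, arguments: dict) -> dict:
    """JSON-RPC 2.0 request for the MCP tools/call method."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}


# 1) The model reads the docs, 2) proposes arguments (made-up values),
# 3) the client checks them and, if nothing is missing, sends the call.
print(read_documentation("create_invoice")["content"][0]["text"])
proposed = {"customer": "cus_123", "days_until_due": 30}
gaps = missing_arguments(CREATE_INVOICE_SCHEMA, proposed)
if gaps:
    print("Ask the user for:", ", ".join(gaps))
else:
    print(json.dumps(tools_call_request(2, "create_invoice", proposed)))
```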

Now even OpenAI has made MCP available in its systems. They’ve added support to their Agents SDK, so their suite of tools can reach other tools as well. This is a change from their previous strategy of building direct extensions with other applications. Direct integrations have some benefits: better security, a well-defined set of capabilities, and more trust. However, now that anyone can build an MCP server, the open-source path appears to offer more optionality and access. That also brings a slew of other issues, which we’ll get to at the end.

With OpenAI finally adding MCP, this marks the moment MCP became the likely standard going forward. Shout out to Anthropic for coming up with it back in November 2024.

So in summary, MCP solves a few problems:

- Authentication: you grant access to the chatbot, IDE, or other surface you’re using the MCP server from
- Understanding: the LLM now knows which tools and commands are available to it
- Execution: it can now actually carry out API calls and other ways of executing tasks
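These three points map neatly onto the messages a client and server exchange. Below is a toy, in-process Python server (the `ToyMcpServer` class and its single `post_message` tool are hypothetical) that answers `initialize`, `tools/list`, and `tools/call`, with the credential passed in through its environment. It is simplified: there is no transport, no `notifications/initialized` step, and no real Slack call.

```python
class ToyMcpServer:
    """Hypothetical in-process server answering three MCP methods.
    It illustrates the flow only; it is not a real Slack integration."""

    def __init__(self, env: dict[str, str]) -> None:
        # Authentication: the key is handed to the server once, via its environment.
        # A real server would attach it to every Slack API request.
        self.token = env["SLACK_BOT_TOKEN"]
        self.posted: list[tuple[str, str]] = []

    @staticmethod
    def _error(request_id, code: int, message: str) -> dict:
        return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}

    def handle(self, request: dict) -> dict:
        method, params = request["method"], request.get("params", {})
        if method == "initialize":
            result = {"protocolVersion": "2024-11-05", "capabilities": {"tools": {}},
                      "serverInfo": {"name": "toy-slack", "version": "0.0.1"}}
        elif method == "tools/list":
            # Understanding: advertise what the model is allowed to do.
            result = {"tools": [{
                "name": "post_message",
                "description": "Post a new message to a channel.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"channel_id": {"type": "string"}, "text": {"type": "string"}},
                    "required": ["channel_id", "text"],
                },
            }]}
        elif method == "tools/call":
            if params.get("name") != "post_message":
                return self._error(request.get("id"), -32602, "Unknown tool")  # invalid params
            # Execution: actually perform the action (here, just record it).
            args = params["arguments"]
            self.posted.append((args["channel_id"], args["text"]))
            result = {"content": [{"type": "text", "text": "posted"}], "isError": False}
        else:
            return self._error(request.get("id"), -32601, "Method not found")
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


server = ToyMcpServer({"SLACK_BOT_TOKEN": "xoxb-your-bot-token"})
server.handle({"jsonrpc": "2.0", "id": 1, "method": "initialize", "params": {}})
tools = server.handle({"jsonrpc": "2.0", "id": 2, "method": "tools/list"})["result"]["tools"]
reply = server.handle({
    "jsonrpc": "2.0", "id": 3, "method": "tools/call",
    "params": {"name": "post_message", "arguments": {"channel_id": "C0123", "text": "hello"}},
})
print([t["name"] for t in tools], reply["result"]["content"][0]["text"], server.posted)
```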

With these three barriers removed, using a chatbot to act on external systems, writing a complex app with more context, or stringing together a bunch of tools for agents becomes extremely powerful.

But there’s been even more progress here: startups and other companies are making MCP even more accessible. For example, Hyperbrowser is a one-stop-shop MCP server that lets agents quickly scrape the web or even use browsers. Cloudflare supports remote hosting of MCP servers, makes authorization super easy, and makes the MCP server accessible to other services. There are already dozens of MCP repositories to browse (https://mcp.so/ lists nearly 5,000 different servers as of this writing).

There is one more thing to worry about, though: whenever you connect to an MCP server, you’re handing it your keys. That means you (or an agent) could run a prompt or command that makes consequential changes. What if the agent decides to delete data, for example? It also opens the door to nefarious actors: what if someone publishes an MCP server that is really a backdoor into your local machine or the system you’re plugging into? It’s a kind of “stranger’s API” that you’re essentially trusting. By contrast, when you connect to an “official API” today, you can feel safer that you’re interacting with the application as intended.
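One common-sense mitigation for the “agent deletes data” risk is a client-side gate that decides, per tool, whether a call runs freely, needs a human’s approval, or is blocked. The sketch below is a hypothetical policy, not a feature of MCP itself, and the tool names in it are illustrative. Note what it doesn’t solve: a malicious server still runs on your machine with whatever keys you gave it, so vetting who published a server matters just as much.

```python
from typing import Callable

# Hypothetical policy; tool names are illustrative.
READ_ONLY = {"list_channels", "read_documentation"}
NEEDS_CONFIRMATION = {"post_message", "create_invoice", "delete_customer"}


def authorize(tool_name: str, confirm: Callable[[str], bool]) -> bool:
    """Decide whether the client should forward a tools/call for tool_name."""
    if tool_name in READ_ONLY:
        return True
    if tool_name in NEEDS_CONFIRMATION:
        # Ask a human before anything that writes, sends, or deletes.
        return confirm(f"Allow the agent to run {tool_name!r}?")
    return False  # deny anything the policy doesn't know about


def always_no(question: str) -> bool:
    print(question)
    return False


print(authorize("list_channels", always_no))    # True
print(authorize("delete_customer", always_no))  # prints the question, then False
print(authorize("drop_everything", always_no))  # False
```

Denying unknown tools by default means a server that quietly adds a new tool can’t have it invoked until someone reviews the policy.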

All in all, MCP is now the standard, and if you recall from a few posts ago, you need two major things to be truly productive with agents: trust and, of course, tools. With MCP, we’ve opened the door to all the tools, but now it’s up to society to build trust, not just with AI, but with each other as well.