While the AI industry argued over whether Open Source could catch up to Closed Source, another forgotten argument popped up in January: whether China could catch up to the United States. In both cases, the answer was yes.

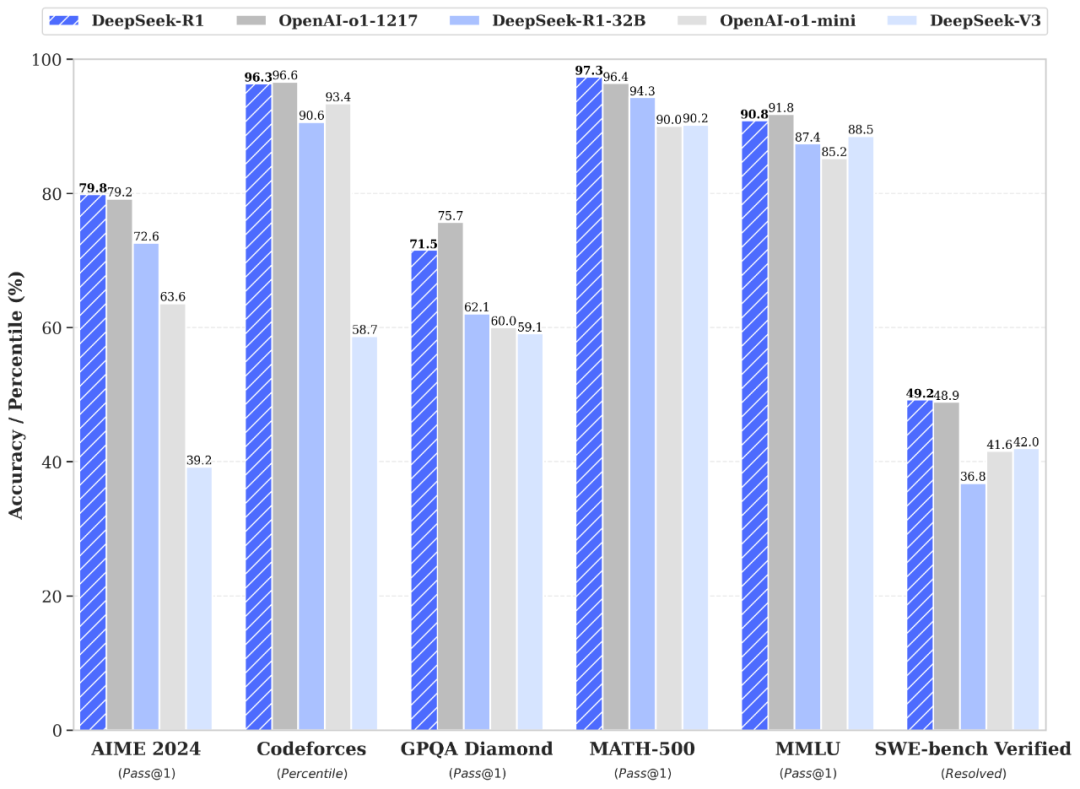

DeepSeek released R1, bringing an o1-level model to market for anyone to use. Take a look at some of the benchmarks: it blows past V3 (which was already beating GPT-4o and Claude 3.5 Sonnet in some areas) and is on par with o1 (currently the best available model until o3 comes out):

Even more shocking, the model is about 30x cheaper to run (roughly $2 vs. $60 per million output tokens). Remember, OpenAI said that the $200-a-month subscription to o1 (ChatGPT Pro) was still losing them money. A big reason DeepSeek can keep costs down is that R1 is a mixture-of-experts (MoE) model: it has 671B total parameters, but only 37B are activated for each token, so it runs much faster than its total size suggests. Just for kicks, they also distilled R1 into some smaller Qwen and Llama models (distillation means using a big model to improve a small model), and the results are crazy as well:

Basically they were able to make a 32B model outperform GPT-4o on these benchmarks.
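To make the mixture-of-experts idea a bit more concrete, here is a toy Python sketch of top-k routing. It assumes a simple softmax-gated router choosing 2 of 8 tiny random "experts" (all sizes and names are made up for illustration); DeepSeek-V3's real router, with many fine-grained routed experts plus a shared expert, is considerably more elaborate. The point is only that each token runs through a small subset of the weights, which is how 37B of 671B parameters (about 5.5%) can be active per token.

```python
import math
import random

def softmax(xs):
    # Numerically stable softmax over a list of floats
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(dim, seed):
    # Hypothetical toy expert: a random dim x dim linear map
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

def apply_expert(weights, x):
    # Matrix-vector product: one output value per row
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def moe_forward(x, router, experts, k=2):
    """Route one token vector x to its top-k experts and mix their outputs."""
    # Router score for each expert: dot product of its router row with the token
    scores = [sum(r * xi for r, xi in zip(row, x)) for row in router]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights are renormalized over the selected experts only
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = apply_expert(experts[i], x)  # only the k chosen experts are computed
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top

def active_fraction(active_params, total_params):
    # Share of the parameters actually used for a single token
    return active_params / total_params

DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
rng = random.Random(0)
router = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts = [make_expert(DIM, seed) for seed in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]

output, chosen = moe_forward(token, router, experts, k=TOP_K)
print("experts used:", chosen)  # two expert indices out of 8
print(f"{active_fraction(37e9, 671e9):.1%}")  # prints 5.5%
```

The compute per token scales with the experts that actually run, not with the total parameter count, which is the intuition behind R1 being cheap to serve.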

Another interesting tidbit: DeepSeek isn’t even a standalone AI company; it’s a lab owned by a hedge fund called High-Flyer. The funniest tweet was from the devs saying this was a side project:

Finally, the other crazy fact is that it reportedly cost less than $6M to train this model. That’s right: while projects like Stargate aim to raise as much as $500B, this model is on par with or better than models coming out of multi-billion-dollar data centers. How is this possible? According to the stats we have so far:

It performs as well as or better than currently available models

It’s many times cheaper to run

It was many times cheaper to train

It comes from the only AI lab making progress of this magnitude without raising any outside funding

It’s free and open source under the MIT license (anyone can run it)

There’s a lot of skepticism here. Scale AI’s CEO said they actually have about 50k H100s (far more than $6M worth of compute). They could also have a massive GPU stockpile that was previously used for financial modeling and crypto mining (let’s not even talk about how they would have snuck in H100s). Also, R1 only matches “today’s” models: OpenAI clearly has o3 coming, and rumors are that Anthropic has one coming too. So how did they do this?

One theory is that they are just really damn smart. They hire from the top 3 schools in China, which, in case you didn’t know, have an acceptance rate of 0.03-0.04%, far more selective than the top schools in the US (not to discount our education system…). They aren’t actually PhDs; they are just very smart people who probably reverse-engineered many of the breakthroughs already on the market, and somehow they caught up very quickly. My guess is they are also not constrained by data or other regulation. I tried the model out myself and can say that it does feel great, though we will probably need to run our own benchmarks internally.

I have to say, having DeepSeek R1 “publicly” show its chain of thought is fascinating:

You can see that during these 14 seconds of extra thinking time, it sounds and thinks like a human. It works through the logic and even questions itself on facts. When I read this, it almost sounded like me typing out the finance parts of my newsletter! The outputs are relatively boring in comparison. In the web interface, though, there are some issues with censorship. For example, you cannot ask about Tiananmen Square; it actually stops itself the moment it starts thinking about it:

This apparently does not happen with the open model, though. Okay, let’s dig a bit into how R1 was trained:

Their first attempt was DeepSeek-R1-Zero, which applied large-scale reinforcement learning (RL) directly to the base model (DeepSeek-V3-Base) without any supervised fine-tuning (SFT). The training prompts followed this template, with “prompt” replaced by the actual question:

A conversation between User and Assistant. The user asks a question, and the Assistant solves it. The assistant first thinks about the reasoning process in the mind and then provides the user with the answer. The reasoning process and answer are enclosed within <think> </think> and <answer> </answer> tags, respectively, i.e., <think> reasoning process here </think> <answer> answer here </answer>. User: prompt. Assistant:

This roughly follows the o1-style chain-of-thought format: there is a “thinking” section and an “answer” section. As with the o1 findings, giving the model more time for the “thinking” part produces better “answers”, though of course the user can only be so patient. What is interesting is that the model learns on its own how long to think about a problem, including going back to re-evaluate its earlier steps. They call this the “aha moment”: rather than being taught how to solve problems, the model, trained only with fixed rule-based rewards, suddenly learns to spend more thinking time and re-evaluate its approach. My brain does hurt a bit thinking about how they got this working.
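As a rough illustration of what “fixed rule-based rewards” can look like, here is a Python sketch that scores a completion written in the template above. The R1 paper describes accuracy rewards (is the final answer correct?) and format rewards (is the reasoning placed inside the think tags?); the regex, the exact-match check, and the 0.5 format weight below are my own assumptions, not DeepSeek’s code.

```python
import re

# Expected layout: <think> ... </think> followed by <answer> ... </answer>
TEMPLATE_RE = re.compile(
    r"^\s*<think>(?P<think>.+?)</think>\s*<answer>(?P<answer>.+?)</answer>\s*$",
    re.DOTALL,
)

def format_reward(completion: str) -> float:
    # 1.0 if the completion follows the think/answer template, else 0.0
    return 1.0 if TEMPLATE_RE.match(completion) else 0.0

def accuracy_reward(completion: str, reference: str) -> float:
    # Exact match of the extracted answer against a known reference answer;
    # real checkers (math normalizers, code test runners) are more forgiving.
    m = TEMPLATE_RE.match(completion)
    if m is None:
        return 0.0
    return 1.0 if m.group("answer").strip() == reference.strip() else 0.0

def rule_based_reward(completion: str, reference: str,
                      format_weight: float = 0.5) -> float:
    # Hypothetical weighting: accuracy counts fully, format counts half
    return accuracy_reward(completion, reference) + format_weight * format_reward(completion)

good = "<think>2 + 2 is 4, let me double-check... yes.</think> <answer>4</answer>"
wrong = "<think>2 + 2 is 5?</think> <answer>5</answer>"
messy = "The answer is 4"
for c in (good, wrong, messy):
    print(rule_based_reward(c, "4"))
# prints 1.5, then 0.5, then 0.0
```

No learned judge is involved: the reward is just a couple of deterministic checks, which is part of what makes this kind of RL cheap to scale.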

But R1-Zero had pretty bad readability, so they had to do more work to make it more human-friendly…

Enter DeepSeek-R1, which injects better data to “cold start” the model. Basically, this means taking thousands of curated chain-of-thought examples, making them readable, and fine-tuning on them before RL; later, reasoning data is combined with non-reasoning data from DeepSeek-V3 for another round of fine-tuning, so the model ends up with more good “problem-solving” information.

Whew, so R1 is created, and it now has reasoning AND readability, making it great to release to the public. But as we said above, they didn’t stop there! They fine-tuned smaller models on 800k samples curated with R1. The main question was whether applying the RL techniques above directly to small models beats distilling R1 into them. It turns out distillation is far more effective at improving smaller models, and with far less computation.
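Distillation is conceptually simple: sample reasoning traces from the big “teacher” model, keep the good ones, and fine-tune the small “student” on them with ordinary supervised learning. Below is a toy Python sketch of just the data-building half. `teacher_generate` is a hypothetical stand-in for calling R1 (here it adds two numbers and is wrong about 30% of the time), the correctness filter is an assumption in the spirit of the rejection sampling the paper describes for its SFT data, and the actual fine-tuning of a Qwen or Llama student is left out.

```python
import random
from dataclasses import dataclass

@dataclass
class SFTExample:
    prompt: str
    completion: str  # full teacher output, reasoning included

def teacher_generate(prompt: str, rng: random.Random) -> tuple[str, str]:
    """Hypothetical stand-in for sampling from a large teacher model.
    Returns (reasoning trace, final answer); correct about 70% of the time."""
    a, b = map(int, prompt.split("+"))
    answer = a + b if rng.random() < 0.7 else a + b + 1
    return f"Adding {a} and {b} gives {answer}.", str(answer)

def build_distillation_set(problems, samples_per_problem=4, seed=0):
    """Rejection sampling: keep only teacher outputs whose answer is correct."""
    rng = random.Random(seed)
    dataset = []
    for prompt, reference in problems:
        for _ in range(samples_per_problem):
            reasoning, answer = teacher_generate(prompt, rng)
            if answer == reference:  # rule-based correctness filter
                completion = f"<think>{reasoning}</think> <answer>{answer}</answer>"
                dataset.append(SFTExample(prompt, completion))
    return dataset

problems = [("2+2", "4"), ("3+5", "8"), ("10+7", "17")]
data = build_distillation_set(problems)
print(len(data), "examples kept out of", 4 * len(problems))
# A student model would then be fine-tuned on `data` with standard SFT.
```

The expensive part (RL) happens once on the big model; the student only ever sees plain supervised examples, which is why distillation needs so much less compute.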

One last note: although the DeepSeek R1 paper lists 18 core contributors, it has 197 contributors in total, so there is actually a very large team behind R1.

To summarize the breakthroughs here:

DeepSeek used reinforcement learning without supervised fine-tuning (RL vs. SFT) for R1-Zero, which allowed them to scale very quickly

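Group Relative Policy Optimization (GRPO) makes RL training cheaper: it samples a group of answers for each prompt and scores each answer against the group’s average reward, skipping the separate critic (value) model that methods like PPO need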

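Rule-based rewards: whereas others have been using preference-based rewards, DeepSeek used simple rules that check answer correctness and output format, which made the model focus and “think” much more accurately. The rules also targeted reasoning tasks such as math and coding, where answers can be checked automatically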

Cold start: training in multiple stages, first fine-tuning on a small set of quality data, then running RL

Distillation from R1 to smaller models to improve their quality

And maybe underrated: R1-Zero tended to mix languages in its reasoning (probably because of a lot of Chinese training data), which they fixed with a language consistency reward when they moved from R1-Zero to R1
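The GRPO and language-consistency items above are easiest to see with numbers. GRPO (introduced in DeepSeek’s earlier DeepSeekMath work) normalizes each sampled answer’s reward by the mean and standard deviation of its group, so the group itself acts as the baseline instead of a learned critic. The R1 paper describes the language consistency reward as the proportion of target-language words in the chain of thought. This Python sketch computes just those two quantities; the clipped policy-ratio objective and KL penalty of full GRPO are left out, the rewards are made-up values, and treating “no CJK characters” as “English word” is my own simplifying assumption.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """GRPO-style advantages: A_i = (r_i - mean(r)) / std(r) within one group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; implementations differ on this
    return [(r - mu) / (sigma + eps) for r in rewards]

def is_cjk(token: str) -> bool:
    # True if the token contains any CJK Unified Ideograph
    return any("\u4e00" <= ch <= "\u9fff" for ch in token)

def language_consistency(cot: str) -> float:
    """Share of whitespace-separated words with no CJK characters (English target assumed)."""
    words = cot.split()
    if not words:
        return 0.0
    return sum(not is_cjk(w) for w in words) / len(words)

# Four sampled answers to one prompt, scored by rule-based rewards (made-up values)
rewards = [1.0, 0.0, 1.0, 0.0]
print([round(a, 3) for a in group_relative_advantages(rewards)])
# prints [1.0, -1.0, 1.0, -1.0]

# If every answer scores the same, all advantages are 0: no learning signal
print(group_relative_advantages([1.0, 1.0, 1.0]))  # prints [0.0, 0.0, 0.0]

print(language_consistency("Let me 检查 this again"))  # prints 0.8
```

Answers that beat their siblings get pushed up and the rest get pushed down, all without training a second network to estimate values.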

I appreciate that DeepSeek also described in the paper what didn’t work (such as process reward models and Monte Carlo Tree Search); I don’t think most labs would reveal that information. Thanks to these breakthroughs, the DeepSeek team was able to scale with much less compute and much less headcount, and they have shared their findings openly.

Now let’s think about the ramifications. With an open-source, o1-quality model out now, many API providers will quickly offer it and drive the price down to compete. This could also push down prices at frontier model providers like OpenAI and Anthropic; in fact, Meta is likely wondering whether Llama 4 is even at DeepSeek’s level right now. Worse yet, what if these models start outperforming premium model providers while being offered at a fraction of the price? This could truly disrupt the AI industry’s math on AI spend versus return on investment. It could also turn billions in capex into waste if a company trains a model and the free ones turn out to be better.

(Data Centers in 10 years, though probably not inside a city)

That’s assuming, of course, that DeepSeek remains open source. Meta has always been a player here, but DeepSeek shows that open source catching up to frontier models could become more and more common. With the breakthroughs we talked about earlier, and more still to be discovered, smaller teams with smaller clusters can probably compete at the same level as the billion-dollar clusters.

I also have to add that, so far, using DeepSeek R1 has been a joy. It’s now a 5th option in my rotation alongside ChatGPT, Claude, Gemini, and Perplexity, and it’s honestly getting hard to pick which provider to use for different use cases.

In summary, while the evals are still coming in, DeepSeek R1 looks potentially as powerful as o1, but free and open source. It was much cheaper to train, it is much cheaper to run, and it proves to the world that DeepSeek is to be taken seriously. Not only that, China is to be taken seriously too. Despite export controls meant to keep our most powerful GPUs out of their hands, Chinese labs are somehow still pushing out models that are just as good. During this same hype cycle, ByteDance also released an o1-level model called Doubao 1.5 Pro. What is the point of these sanctions if China can still lead the AI race? Maybe just to slow them down? Stepping back, the whole AI industry needs to rethink how these models will be priced given these advancements. It may hurt the return on investment for larger players, or it may let smaller players spend less and still be more effective. On this trajectory, we should see quite a few sub-$10B companies join the top 10 premium model creators within the next year… that would be quite something.